Introduction

Convolutional Neural Networks (CNNs) can do many things that were previously considered almost impossible (I am talking about the 1960s here). After Yann LeCun et al. introduced CNNs for digit recognition, deep learning and machine learning gained a tremendous boost in almost every nook and corner of the IT industry. Machine learning itself is a very old topic, but earlier there was not enough computational power to carry out such enormous computations or to store such huge quantities of data. Thanks to the growth of hardware technologies (and the steady growth described by Moore's Law), there is now a huge demand for this subject in industry. People qualified to solve machine learning tasks are often paid almost double what software engineers earn, and almost every IT department seeks an analyst who can extract information from data to predict the future and make the company aware of the policies it needs to adopt.

One of the greatest developments in the field of machine learning is image recognition. Although several algorithms focus on playing games, in particular with deep reinforcement learning, our experiment explores a rather simple model for automating games. The model we used was developed over the years by eminent researchers through trial and error. We tweaked some of its parameters and showed that, just by seeing and learning, the computer can perform many tasks, which could automate many things in day-to-day life.

A CNN applied to image recognition acts on a single image. So, to apply a CNN to video, we have to extract the frames and process them one by one. If the CNN takes about 0.4 seconds per frame, it is far too slow for this purpose: a video can have 60 frames per second, and a real-time system should handle at least 30 frames per second. Researchers are, however, constantly working on improving the efficiency of such algorithms so that they can be embedded in, and run on, very low-powered devices [1].
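The real-time argument above is simple arithmetic on frame rates. A minimal sketch in Python, using the 0.4 s per frame and 30/60 frames-per-second figures from the text (the function names are illustrative only):

```python
def max_fps(seconds_per_frame: float) -> float:
    """Highest frame rate a model can sustain at a given per-frame latency."""
    return 1.0 / seconds_per_frame


def frame_budget(target_fps: float) -> float:
    """Time available to process one frame at a target frame rate, in seconds."""
    return 1.0 / target_fps


def is_real_time(seconds_per_frame: float, target_fps: float = 30.0) -> bool:
    """True if the model keeps up with the target frame rate."""
    return seconds_per_frame <= frame_budget(target_fps)


if __name__ == "__main__":
    latency = 0.4  # seconds per frame, the figure quoted in the text
    print(f"max fps at {latency} s/frame: {max_fps(latency):.1f}")  # 2.5
    print(f"budget at 30 fps: {frame_budget(30) * 1000:.1f} ms")  # 33.3
    print(f"budget at 60 fps: {frame_budget(60) * 1000:.1f} ms")  # 16.7
    print("real time at 30 fps?", is_real_time(latency))  # False
```

At 0.4 s per frame the model manages only 2.5 frames per second, far below the 30 frames per second (about 33 ms per frame) needed for real-time play.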

Our Approach

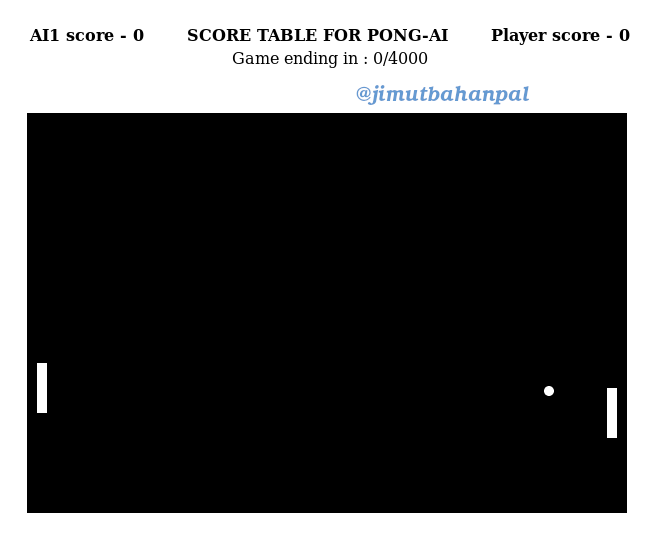

Here, we design and discuss a certain class of games that are extremely simple to play and can be played automatically, to see how much a CNN alone can achieve in solving and automating tasks. There are far more advanced models, such as AlphaGo, which plays Go, and others that use advanced machine learning concepts. Our question was: "Can a CNN play Pong?" We started from a Pong game written by Harrison and adapted it to our version. The answer comes from asking ourselves another question: can we predict the next move of the player, when playing one-on-one against the computer, just by looking at a static image such as the one in Fig. 1?

Fig. 1: Which direction (up or down?) shall the right paddle move?

The answer is no: we cannot predict the next move in Pong from a single image. However, if we are told that the ball is moving towards the player (the right paddle), we may assume that the player might want to move down (imagine an invisible ray from the ball hitting the wall). If, instead, the ball is moving towards the computer, the player tends to move upward (or stay still, though that depends entirely on how the player chooses to act). Because a single frame does not reveal the ball's direction, the same image may be labelled "Up" at one time and "Down" at another. We could even ignore the frames in which the ball is moving away from the player towards the computer, since processing them wastes computation (the AI needs to act only when the ball is coming towards it). This behaviour can be modelled successfully with reinforcement learning or with some variant of LSTM (Long Short-Term Memory) networks. Our aim is to explore only the subset of games that a CNN can play easily and successfully.
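Deciding whether to act at all needs the ball's direction, which one frame cannot provide but two consecutive frames can. A minimal sketch with NumPy, assuming grayscale frames as 2-D arrays in which the ball is brighter than `threshold` and the paddles sit within `paddle_margin` columns of each edge; both values, and the helper names, are hypothetical and not part of the original setup:

```python
import numpy as np


def ball_column(frame: np.ndarray, threshold: int = 200, paddle_margin: int = 10):
    """Mean column of pixels brighter than `threshold`, or None if there are none.

    Hypothetical assumptions: `frame` is a 2-D grayscale array, the ball is the
    only bright object away from the edges, and the paddles lie within
    `paddle_margin` columns of the left and right borders (ignored here).
    """
    field = frame[:, paddle_margin:frame.shape[1] - paddle_margin]
    cols = np.nonzero(field > threshold)[1]
    if cols.size == 0:
        return None
    return float(cols.mean()) + paddle_margin  # back to full-frame coordinates


def ball_moving_right(prev_frame: np.ndarray, curr_frame: np.ndarray, **kwargs):
    """True if the ball moved towards the right paddle, False if it moved left,
    None if the direction cannot be determined from the two frames."""
    x0 = ball_column(prev_frame, **kwargs)
    x1 = ball_column(curr_frame, **kwargs)
    if x0 is None or x1 is None or x0 == x1:
        return None
    return x1 > x0


if __name__ == "__main__":
    prev = np.zeros((40, 60), dtype=np.uint8)
    curr = np.zeros((40, 60), dtype=np.uint8)
    prev[10:12, 20:22] = 255  # ball near column 20
    curr[12:14, 25:27] = 255  # one frame later, near column 25
    print(ball_moving_right(prev, curr))  # True: act on this frame
```

With this check, frames for which `ball_moving_right` is not `True` could simply be skipped, which is the saving in computation mentioned above.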

Nevertheless, we got it to play: it saves the ball from hitting the wall many times, but it rarely (if ever) scores against the robot computer opponent, since we tested it with a simple CNN model. The video of the AI's performance is shown in Video 2. The AI performs poorly because choosing a move requires considering other frames too (i.e., at least some of the previous frames).
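A common way to show a CNN "at least some previous pictures" is to stack the last k frames as the channels of a single input, as DQN-style Atari agents stack four frames. This is a different setup from the single-image CNN used in this experiment; the sketch below assumes 2-D grayscale frames and k = 4 by default, and `FrameStack` is a hypothetical helper:

```python
from collections import deque

import numpy as np


class FrameStack:
    """Hypothetical helper: keep the last `k` frames as one (H, W, k) array."""

    def __init__(self, k: int = 4):
        self.k = k
        self.frames = deque(maxlen=k)  # the oldest frame drops out automatically

    def push(self, frame: np.ndarray) -> np.ndarray:
        """Add a 2-D frame; on the first call, pad with copies so k frames exist."""
        if not self.frames:
            for _ in range(self.k - 1):
                self.frames.append(frame)
        self.frames.append(frame)
        return self.state()

    def state(self) -> np.ndarray:
        """Frames stacked along the last axis, oldest first."""
        return np.stack(self.frames, axis=-1)


if __name__ == "__main__":
    stack = FrameStack(k=4)
    print(stack.push(np.zeros((64, 64))).shape)  # (64, 64, 4)
```

The resulting (H, W, k) array can be fed to a CNN whose first convolution expects k input channels, so the ball's motion shows up as differences between channels.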

Tutorial

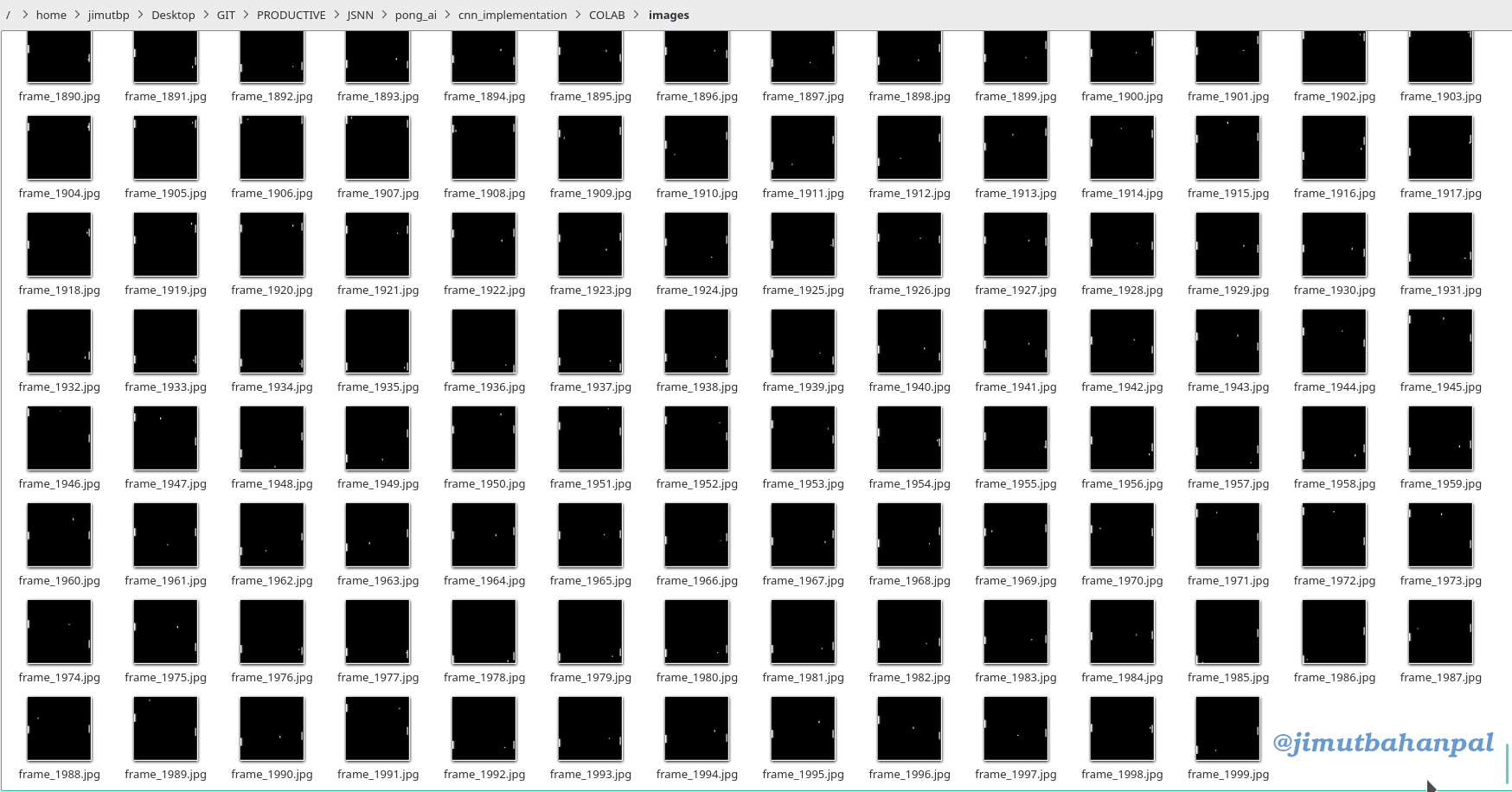

Let's see how we did this using Python 3. First, we need a system that collects data for training the AI, because a CNN needs labelled data. For this, we wrote a script that records each action as a one-hot encoded value (i.e., a vector with a 1 in the position of the chosen action and 0 elsewhere) and stores the actions in a CSV (comma-separated values) file. Each line number in the file corresponds to a frame, and we save the corresponding image in a separate folder. The collected images are shown in Fig. 2.

Unlike Harrison, we used the "human touch": we did not train the AI on the moves of the robot Pong AI (which computes its move from certain mathematical constraints and then acts accordingly). The human touch can help the AI do remarkable things, such as speeding up the paddle by pressing one button continuously. An AI trained on the robot, in contrast, only imitates a player that always acts "optimally" according to fixed mathematical constraints, so it cannot pull off such tricks: for example, it cannot decide to speed up when the ball is coming towards the paddle at high speed, and it will fail to save the ball. Watching the video, we cannot tell whether the AI or a human is playing, because the AI learns from a human; a machine learning AI trained this way tends to perform a task the same way as the human who provided the data.

Script 1: A script in Python3 to create the data for training.

Fig. 2: The images collected for training.
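A minimal sketch of the labelling scheme described above (not the authors' Script 1): each action becomes a one-hot row in a CSV file, and row i belongs to the screenshot saved for frame i. The action names, the `frames/frame_00000.png` naming pattern and the helper names are assumptions; screen capture is left out because the original capture method is not stated.

```python
import csv
import io
from typing import List, Sequence, TextIO

# Hypothetical action set; the label order must stay fixed between
# data collection, training and inference.
ACTIONS = ("up", "down")


def one_hot(action: str, actions: Sequence[str] = ACTIONS) -> List[int]:
    """Encode `action` as a one-hot list, e.g. 'down' -> [0, 1]."""
    if action not in actions:
        raise ValueError(f"unknown action: {action!r}")
    return [1 if a == action else 0 for a in actions]


def image_name(frame_index: int) -> str:
    """Hypothetical file name of the screenshot that belongs to CSV row `frame_index`."""
    return f"frames/frame_{frame_index:05d}.png"


def write_labels(actions_per_frame: Sequence[str], out: TextIO) -> None:
    """Write one CSV row per frame, so row i labels the image image_name(i)."""
    writer = csv.writer(out)
    for action in actions_per_frame:
        writer.writerow(one_hot(action))


if __name__ == "__main__":
    buf = io.StringIO()  # stands in for open("labels.csv", "w", newline="")
    write_labels(["up", "down", "up"], buf)
    print(buf.getvalue())
    print(image_name(1))  # frames/frame_00001.png
```

Keeping the row index and the image index in lockstep is what lets the training script pair every image with its label without storing file names in the CSV.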

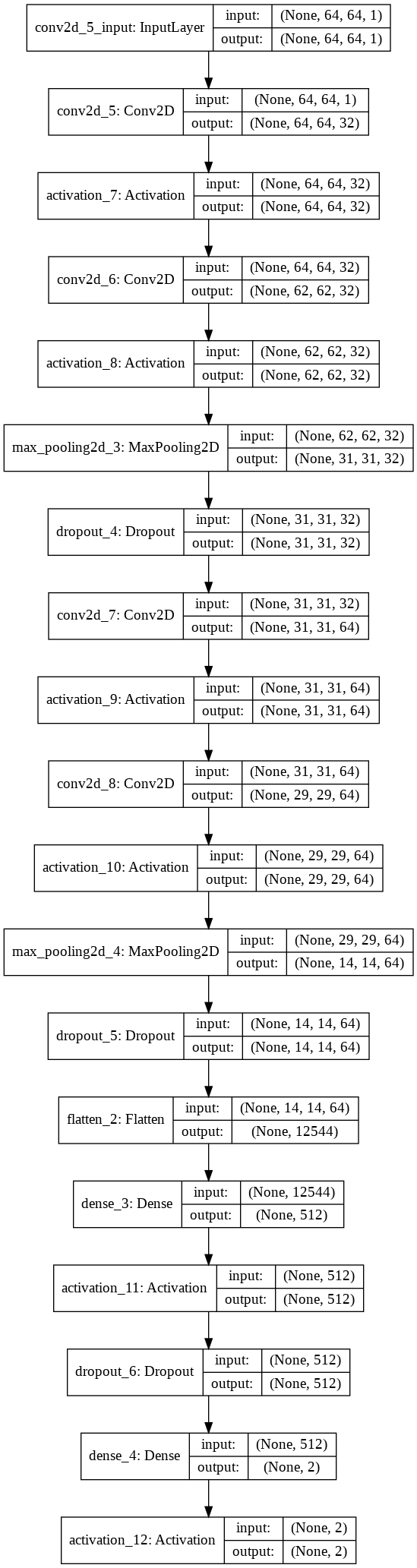

After collecting the data, we need to train the model. The Python 3 training script is shown in Script 2. It uses the CIFAR-10 model, which became a benchmark architecture around 2015.
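To see what the CIFAR-10 model does to an input frame, the sketch below traces output shapes and parameter counts layer by layer. It assumes the layer list of the widely used Keras CIFAR-10 example (two 32-filter and two 64-filter 3x3 convolutions, 2x2 max pooling, a 512-unit dense layer), a 32x32x3 input and two output classes (up/down); the network in Script 2 may differ in these details, and the helper names are hypothetical.

```python
from typing import List, Tuple

Shape = Tuple[int, ...]


def conv2d(shape: Shape, filters: int, kernel: int = 3, padding: str = "same"):
    """Output shape and parameter count of a stride-1 2-D convolution."""
    h, w, c = shape
    if padding == "valid":  # no padding: each side shrinks by kernel - 1
        h, w = h - kernel + 1, w - kernel + 1
    params = (kernel * kernel * c + 1) * filters  # weights + one bias per filter
    return (h, w, filters), params


def max_pool(shape: Shape, size: int = 2):
    """2x2 max pooling halves height and width (rounding down); no parameters."""
    h, w, c = shape
    return (h // size, w // size, c), 0


def dense(units_in: int, units_out: int):
    """Fully connected layer: weights + one bias per output unit."""
    return (units_out,), (units_in + 1) * units_out


def trace_cifar10_cnn(input_shape: Shape = (32, 32, 3), num_classes: int = 2):
    """(layer, output_shape, params) rows for an assumed Keras-example-style CNN.

    Dropout layers are omitted: they change neither shape nor parameter count.
    """
    rows: List[Tuple[str, Shape, int]] = []
    shape: Shape = input_shape
    steps = [
        ("conv 32, same", lambda s: conv2d(s, 32, padding="same")),
        ("conv 32, valid", lambda s: conv2d(s, 32, padding="valid")),
        ("max pool 2x2", max_pool),
        ("conv 64, same", lambda s: conv2d(s, 64, padding="same")),
        ("conv 64, valid", lambda s: conv2d(s, 64, padding="valid")),
        ("max pool 2x2", max_pool),
    ]
    for name, layer in steps:
        shape, params = layer(shape)
        rows.append((name, shape, params))
    flat = shape[0] * shape[1] * shape[2]
    rows.append(("flatten", (flat,), 0))
    shape, params = dense(flat, 512)
    rows.append(("dense 512 (ReLU)", shape, params))
    shape, params = dense(512, num_classes)
    rows.append((f"dense {num_classes} (softmax)", shape, params))
    return rows


if __name__ == "__main__":
    rows = trace_cifar10_cnn()
    for name, shape, params in rows:
        print(f"{name:<20} {str(shape):<16} {params:>10,}")
    print("total params:", sum(p for _, _, p in rows))
```

Under these assumptions the network has 1,246,754 trainable parameters, of which 1,180,160 sit in the 512-unit dense layer, so the dense head rather than the convolutions dominates the model size.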

Script 2: A script in Python3 to train the data.

We trained the model on Colab (Google Colaboratory) using the GPU runtime: we uploaded the data as a zip file, unzipped it there, and downloaded the trained .h5py model to test it on our local machine. The best thing about Google Colaboratory is that it is free and provides NVIDIA Tesla T4 GPUs. After downloading the .h5py file (training took only a few minutes), we used Script 3 to run the Pong AI on our local machine.
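A minimal sketch of a run-time loop of the kind Script 3 performs (not the authors' script): predict action probabilities for each frame, take the most probable action, press the matching key, and record the per-frame latency. `predict` and `press` are hypothetical callables; with Keras, `predict` could wrap `model.predict` on a preprocessed frame of a model loaded with `keras.models.load_model`, and `press` would send a key event through whatever input library is available.

```python
import time
from typing import Callable, Iterable, List, Sequence

# Hypothetical label order; it must match the order used when training.
ACTIONS = ("up", "down")


def choose_action(probs: Sequence[float], actions: Sequence[str] = ACTIONS) -> str:
    """Pick the action with the highest predicted probability (argmax)."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return actions[best]


def run(frames: Iterable, predict: Callable[[object], Sequence[float]],
        press: Callable[[str], None]) -> List[float]:
    """Act on each frame and return the per-frame latencies in seconds."""
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        action = choose_action(predict(frame))
        press(action)  # hypothetical: send the key for `action` to the game
        latencies.append(time.perf_counter() - start)
    return latencies


if __name__ == "__main__":
    # Stub model: says "up" on even frames and "down" on odd ones.
    fake_predict = lambda f: [0.9, 0.1] if f % 2 == 0 else [0.2, 0.8]
    pressed = []
    run(range(4), fake_predict, pressed.append)
    print(pressed)  # ['up', 'down', 'up', 'down']
```

The recorded latencies give a direct check against the 30 frames-per-second requirement discussed in the introduction.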

Script 3: A script in Python3 to run the AI on the local machine.

Conclusions

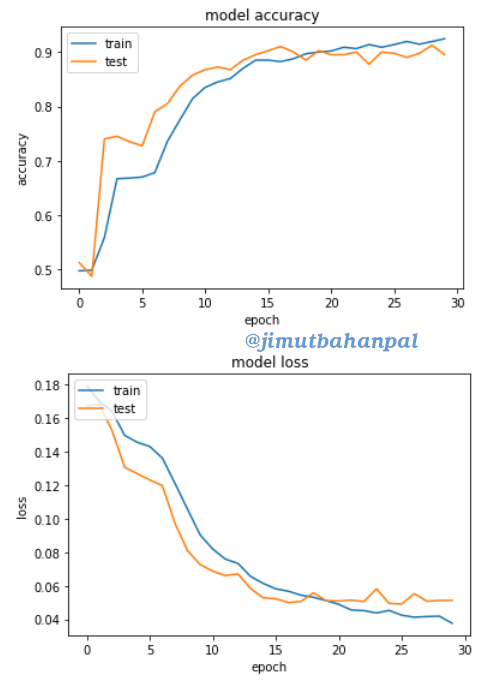

When training the AI on 2K images, we get the results shown in Fig. 3. The result is quite good: over 85% accuracy on both the training and test sets. Since this is only 2,000 images (1K for the up action and another 1K for the down action), it does not fall into the domain of big data. When we use 30K images, which falls only very marginally into the domain of big data, we can see how the model actually behaves on the training and test images, as shown in Fig. 4.

Fig. 3: The results obtained by training 2k images.
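The 2K experiment used equal numbers of up and down images. A minimal sketch of drawing such a balanced set and splitting it into training and test parts; the 20% test fraction, the seed and the helper name are assumptions, since the post does not state how the split was made:

```python
import random
from typing import Dict, List, Tuple


def balanced_split(samples_by_label: Dict[str, List[str]], per_class: int,
                   test_fraction: float = 0.2, seed: int = 0):
    """Hypothetical helper: draw `per_class` samples per label, then split.

    Returns (train, test) as shuffled lists of (sample_id, label) pairs,
    with the same label proportions in both parts.
    """
    rng = random.Random(seed)
    train: List[Tuple[str, str]] = []
    test: List[Tuple[str, str]] = []
    for label, items in samples_by_label.items():
        if len(items) < per_class:
            raise ValueError(f"only {len(items)} samples for {label!r}")
        chosen = rng.sample(items, per_class)  # without replacement
        n_test = int(round(per_class * test_fraction))
        test += [(s, label) for s in chosen[:n_test]]
        train += [(s, label) for s in chosen[n_test:]]
    rng.shuffle(train)
    rng.shuffle(test)
    return train, test


if __name__ == "__main__":
    data = {"up": [f"up_{i}" for i in range(1500)],
            "down": [f"down_{i}" for i in range(1500)]}
    train, test = balanced_split(data, per_class=1000)
    print(len(train), len(test))  # 1600 400
```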

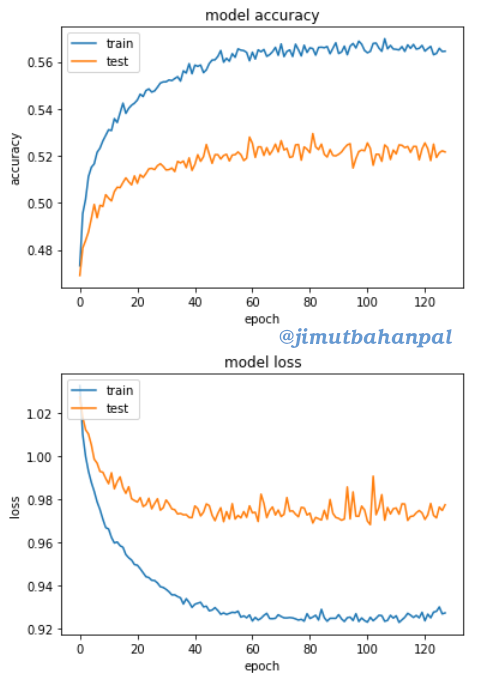

Fig. 4: The results obtained by training 30k images.

This shows that the model does not perform well when we train it with more data. An accuracy of about 56% on the training data and 50% on the test data, where random guessing between the two actions is already expected to score 50%, shows that the model will not perform well and that we need a different model. With more data, a suitable model should perform well; if it does not (i.e., if it does not reach at least 90% accuracy), we should change the model rather than look for other causes.
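The gap between 50% and the 90% target is easier to judge against a baseline. A minimal sketch: always predicting the more frequent action scores that action's share of the data, so any model worth keeping must beat this number. The function names are illustrative, and whether the 30K set was balanced is not stated in the post:

```python
from collections import Counter
from typing import Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def majority_baseline(y_true: Sequence[str]) -> float:
    """Accuracy of always predicting the most frequent label."""
    counts = Counter(y_true)
    return counts.most_common(1)[0][1] / len(y_true)


if __name__ == "__main__":
    labels = ["up"] * 500 + ["down"] * 500  # hypothetical balanced test set
    print(majority_baseline(labels))  # 0.5
    print(accuracy(labels, ["up"] * 1000))  # 0.5
```

On a balanced test set this baseline sits at 50%, so a test accuracy of 50% means the model has learned nothing useful from the frames.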

Your task

We have seen that this model does not perform well for the Pong AI, but we have not tried it on the Dino game shown in Fig. 5 (the game built into the Google Chrome browser). Your task, should you choose to accept it, is to implement this setup for the Dino game. Intuitively, we can almost tell the next move by looking at a picture of the Dino game, but the game's speed increases over time, so the CNN AI may fail to time its jumps because it cannot see the future. For that reason I built a game named jump and roll 2D: it is a Dino clone, but with a constant speed. The CNN should give over 90% accuracy on this game and perform satisfactorily. The only problem is that it is very hard to collect data for such a boring game. Nonetheless, you can use the classic Dino game built into Chrome and make the AI work for the "day time" mode only.

Fig. 5: The famous Dino Game, look ma, no internet!

It is bound to give satisfactory results. When you are done, mail me your results! Till then, happy learning!

#Update:

After working with the data I collected by playing a minimal hacked version of Google's Dino game, I got some satisfactory results. The results were not perfect because my PC lagged when capturing the screenshot at the moment I pressed the "Jump" action (spacebar or up arrow). Nonetheless, let's see the video of the Dino game in action:

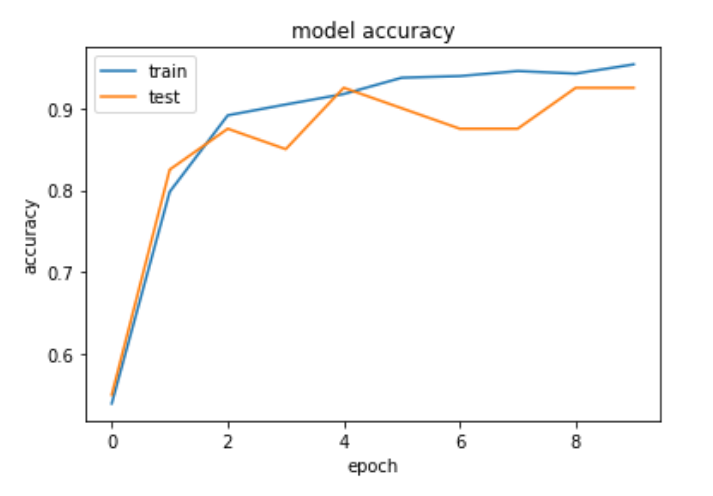

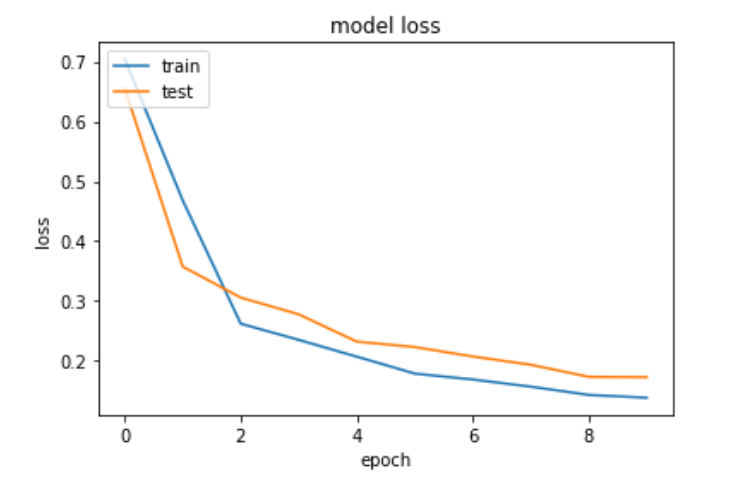

From a statistical perspective, we can also see the accuracy and error in the following figures.

Fig. 6: The accuracy of Dino AI obtained by training 2k images.

Fig. 7: The error of Dino AI obtained by training 2k images.
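One way to reduce the screenshot lag described in the update is to timestamp every screenshot and every key press separately, then pair each press with the last frame captured before it, optionally shifted back by an estimated `lag`. This is a suggestion, not what was done here; the timestamps, the `lag` value and `align_presses` are hypothetical:

```python
from bisect import bisect_right
from typing import List, Optional, Sequence


def align_presses(frame_times: Sequence[float], press_times: Sequence[float],
                  lag: float = 0.0) -> List[Optional[int]]:
    """Hypothetical helper: for each key press, return the index of the last
    frame captured at or before (press_time - lag).

    `frame_times` must be sorted ascending. A press that happened before the
    first frame gets None.
    """
    indices: List[Optional[int]] = []
    for t in press_times:
        i = bisect_right(frame_times, t - lag) - 1  # binary search
        indices.append(i if i >= 0 else None)
    return indices


if __name__ == "__main__":
    frames = [0.0, 0.1, 0.2, 0.3]  # capture times in seconds
    print(align_presses(frames, [0.25, 0.05]))  # [2, 0]
    print(align_presses(frames, [0.25], lag=0.1))  # [1]
```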

Finally, our implementation of the model is shown in Fig. 8.

Fig. 8: Our implementation of the CIFAR-10 model.

References

1. Jimut Bahan Pal, Shalabh Agarwal, “Real Time Object Detection Can be Embedded on Low Powered Devices”, International Journal of Computer Sciences and Engineering, Vol.7, Issue.2, pp.1005-1009, 2019.

Playing a class of games using CNN